How to automate creative testing (Free Google Spreadsheets template)

Overview

What is Creative Testing?

Creative Testing is a collection of methods for improving the performance of marketing campaigns by A/B testing different combinations of images, videos, and text. Discovering what most resonates with your audience is one of the most effective ways to improve the return on investment (ROI) of your marketing campaigns.

Taking the risk out of the creative process

Every marketing campaign, whether it’s advertising on Instagram or sending an email, requires you to design creative and write copy. Normally this is outsourced to designers and copywriters, who tend to have zero visibility into what’s actually performing against your marketing goals. The only way to truly measure the success of your campaigns and improve your results is to run an A/B test, where a randomly assigned group sees a different version, so you can measure how it changes their behavior. Knowing what’s proven to perform makes you more likely to find multiple winners and drive results for your business.

We ran over 8,000 creative experiments at Ladder, the agency I co-founded, and we consistently found that the hardest part was planning. You may not run the numbers ahead of time to know how many variations you can test, or what it will cost to reach statistical significance. If so, you risk inconclusive results and wasted time testing insignificant changes. I made this template to automate those calculations and make it easier to prioritize which variations to test. It also generates IDs for each creative and message variant, which you can use to keep your naming conventions for campaign setup and analytics consistent.

Creative Testing Template in Google Spreadsheets

In this tutorial you will learn how to calculate the cost of running A/B tests based on the number of creative and messaging variations you need to test, using a simple, ready-to-go template. We called our creative testing process ‘performance branding’. The name distinguishes the normal opinion-driven process of brand building from the data-driven process of scientifically testing what works (or doesn’t), which is what this template supports.

I built this version of the template while writing my book “Marketing Memetics: Reverse-Engineering Creativity To Drive Brand Performance”. It’s the guide I wish I’d had back when I was running my agency: one I could give to every technical and analytical member of the team as a framework for navigating the ‘fluffy’ world of creative and branding. The template includes a test duration calculator that determines how long you need to run an experiment to reach statistical significance. It then works out what that would cost based on the benchmarks you input, and spits out the different creative and messaging combinations, complete with unique IDs and naming conventions.

https://docs.google.com/spreadsheets/d/1xUhjUfQxc0GeSH9jtof4is0GZo72k85TSYfmJY8JIoE/copy

Feel free to use the template in your own testing process: open the template link and make a copy to input your own data into. If you’re adapting this template for your own blog posts or videos, all we ask is that you provide proper attribution back to marketingmemetics.com.

> Creative Testing Template

> Explainer Video

If you are interested in this topic and want to learn more about how to not just test creative, but come up with more successful creative ideas in the first place, sign up for updates here:

Table of Contents

How to run a creative testing process with this template for a mobile game:

- Equation and method

- 3-Step Process

- Limitations of this template

- Frequently asked questions

How to calculate test duration and cost in Google Spreadsheets

Equation and method

For this template we use a relatively simple calculation in GSheets to figure out how long a test should run. It takes the conversion rate (cvr) and the expected effect size (effect, a relative lift such as 20%). From these it calculates the number of observations needed per test variation.

26*POWER(SQRT(cvr*(1-cvr))/(cvr*effect),2)
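Written out as an equation, use p for the conversion rate (cvr) and e for the relative effect size (effect). The formula then simplifies as follows. The reading of the constant on the right is an editorial interpretation, not something the template documents: 26 is almost exactly 2(z₀.₉₇₅ + z₀.₉₅)². That is the usual two-sample constant for a two-sided 5% significance level with 95% power.

```latex
n_{\text{per variation}}
  = 26 \left( \frac{\sqrt{p\,(1-p)}}{p\,e} \right)^{2}
  = \frac{26\,(1-p)}{p\,e^{2}},
\qquad
26 \approx 2\left(z_{0.975} + z_{0.95}\right)^{2} = 2\,(1.960 + 1.645)^{2} \approx 25.99
```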

We then multiply that by the number of variations (including the control), which the template gets by counting the names in the creative and messaging columns. Next, we work out how much it would cost to run that test, based on your conversion rate and cost per conversion, to display the full budget calculation.
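The budget logic can be sketched in Python. This is a minimal sketch with stated assumptions, not the template itself:

- The number of variations is the number of creatives multiplied by the number of messages (one ad per combination, control included).
- Observations are impressions, since the conversion rate is events / impressions.
- The function names and example inputs are hypothetical.

```python
def observations_per_variation(cvr: float, effect: float) -> float:
    """Port of the sheet formula 26*POWER(SQRT(cvr*(1-cvr))/(cvr*effect),2)."""
    return 26 * ((cvr * (1 - cvr)) ** 0.5 / (cvr * effect)) ** 2


def estimate_test_budget(n_creatives: int, n_messages: int, cvr: float,
                         effect: float, cost_per_conversion: float) -> dict:
    """Estimate impressions, conversions and spend for a creative x message test."""
    # Assumption: one variation per creative/message combination, control included.
    variations = n_creatives * n_messages
    impressions = observations_per_variation(cvr, effect) * variations
    # cvr is events / impressions, so expected conversions = impressions * cvr.
    conversions = impressions * cvr
    return {
        "variations": variations,
        "impressions": impressions,
        "conversions": conversions,
        "cost": conversions * cost_per_conversion,
    }


# Hypothetical inputs: 3 creatives x 2 messages, 1% CTR, 20% lift, $0.50 per click.
budget = estimate_test_budget(3, 2, cvr=0.01, effect=0.20, cost_per_conversion=0.50)
print(budget)
```

With these inputs the result is 6 variations, roughly 386,100 impressions and 3,861 clicks, for a budget of about $1,930.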

3-Step Process

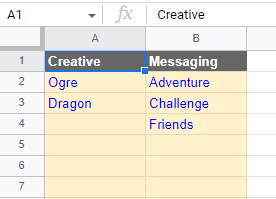

Step 1: Enter all the creative and messaging variants you plan to test on the left side of the spreadsheet, consecutively with no gaps. Add as many ideas as you like, as you can always go back and delete some later when prioritizing.

Step 2: The number of variations is calculated for you, but you need to add assumptions for the Conversion Rate, Cost per Conversion, and Expected Effect Size. Conversion Rate is events / impressions, but choose wisely: a lower conversion rate means a higher cost to run the test. For example, it’s cheaper to test based on clickthrough rate than to test all the way through to install, as you need fewer observations to get a significant result. Cost per Conversion is also lower higher up the funnel, which massively impacts the overall cost of the test. You should keep the Expected Effect Size at 20%, which is the historical average across the 8,000 tests we ran at my agency. Adjust it upwards only if you’re really confident each variation is a massive change over the others.
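To see why the choice of conversion event matters, this sketch compares a clickthrough-based test with an install-based test. It uses the same formula, simplified algebraically. Both funnel stages and their benchmark rates and costs are made-up numbers for illustration, not figures from the template.

```python
def observations_per_variation(cvr: float, effect: float) -> float:
    # Algebraically identical to 26*POWER(SQRT(cvr*(1-cvr))/(cvr*effect),2)
    return 26 * (1 - cvr) / (cvr * effect ** 2)


def cost_per_variation(cvr: float, effect: float, cost_per_conversion: float) -> float:
    impressions = observations_per_variation(cvr, effect)
    conversions = impressions * cvr  # works out to 26 * (1 - cvr) / effect**2
    return conversions * cost_per_conversion


# Hypothetical benchmarks for two funnel stages, both measured per impression.
stages = {
    "click": {"cvr": 0.01, "cost_per_conversion": 0.50},
    "install": {"cvr": 0.001, "cost_per_conversion": 5.00},
}

for name, s in stages.items():
    impressions = observations_per_variation(s["cvr"], 0.20)
    cost = cost_per_variation(s["cvr"], 0.20, s["cost_per_conversion"])
    print(f"{name}: {impressions:,.0f} impressions, ${cost:,.2f} per variation")
```

The comparison with these made-up numbers:

- The install test needs roughly ten times the impressions (about 649,000 vs 64,000).
- It also needs about ten times the budget per variation (about $3,250 vs $320).
- The number of conversions needed barely changes, about 650 in both cases, so most of the cost gap comes from the higher cost per conversion further down the funnel.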

Step 3: You may need to go back and adjust the variants until the test comes in under budget. Once it does, you have a ready-made testing grid that assigns a different ID to every creative and message, as well as to each potential combination. It’s important to use these IDs when setting up your campaign, for example in the names of the ads and in the UTM parameters on your URLs, so you can later report on performance without mistakes. Once you see this grid, you might realize that some combinations just don’t work together: that’s fine! Feel free to delete combinations and adjust the number of variants as you see fit to run a leaner test.
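As an illustration of carrying those IDs through to campaign setup, here is a small Python sketch. It builds a creative x message grid and puts each combination's ID into a URL. Several details are hypothetical:

- The ID format (C01_M02), the variant names, the campaign name and the base URL are illustrative assumptions; the template may format its IDs differently.
- `utm_campaign` and `utm_content` are standard UTM parameters. Using `utm_content` for the ad ID is one common convention.

```python
from itertools import product
from urllib.parse import urlencode

# Hypothetical variants for a mobile game campaign.
creatives = ["Gameplay video", "Character art", "Player review"]
messages = ["Free to play", "Beat your friends"]


def build_grid(creatives, messages):
    """Give every creative x message combination an ID like C01_M02."""
    grid = []
    for (ci, creative), (mi, message) in product(enumerate(creatives, 1),
                                                  enumerate(messages, 1)):
        grid.append({"id": f"C{ci:02d}_M{mi:02d}",
                     "creative": creative, "message": message})
    return grid


def tagged_url(base_url, campaign, combo_id):
    # utm_content is the UTM parameter conventionally used to tell ads apart.
    params = {"utm_campaign": campaign, "utm_content": combo_id}
    return f"{base_url}?{urlencode(params)}"


grid = build_grid(creatives, messages)
for row in grid:
    print(row["id"], "|", row["creative"], "|", row["message"], "|",
          tagged_url("https://example.com/download", "launch_test", row["id"]))
```

Using the same ID in the ad name and in `utm_content` means ad-platform reports and analytics can be joined on one key.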

Limitations of this template

This template uses a relatively simplistic calculation for test duration. It is good enough for making trade-offs in the vast majority of cases, but a statistician would likely point out that it is incorrect in some cases. I personally like to think in terms of Bayesian statistics, where you get not just a point estimate but a range of probabilities, and can make a judgment call more intuitively. However, this is computationally intensive and therefore hard to do in a spreadsheet.
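For readers who want to try the Bayesian view outside a spreadsheet, here is one common approach, not necessarily the author's. It uses a Beta-Binomial model with uniform priors and estimates by Monte Carlo sampling both the probability that a variant beats the control and a 95% credible interval for its lift. The function name and the example counts are hypothetical.

```python
import random


def compare_variations(conv_a: int, n_a: int, conv_b: int, n_b: int,
                       draws: int = 20_000, seed: int = 0):
    """Return P(B beats A) and a 95% credible interval for B's relative lift."""
    rng = random.Random(seed)
    lifts = []
    for _ in range(draws):
        # Beta(1, 1) prior + binomial data -> Beta(1 + conversions, 1 + misses).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        lifts.append(rate_b / rate_a - 1)
    lifts.sort()
    prob_b_better = sum(lift > 0 for lift in lifts) / draws
    interval = (lifts[int(0.025 * draws)], lifts[int(0.975 * draws) - 1])
    return prob_b_better, interval


# Hypothetical results: control 100 installs from 10,000 impressions, variant 150.
prob, (low, high) = compare_variations(100, 10_000, 150, 10_000)
print(f"P(variant beats control) = {prob:.1%}; lift between {low:.0%} and {high:.0%}")
```

The output is a probability and a plausible range for the lift rather than a single pass/fail verdict. That is the kind of result that supports the judgment call described above.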

If you want to get more advanced, you can also considerably cut the number of experiments you need to run by using Taguchi’s ‘Orthogonal Array’ testing. It tests only a balanced subset of the combinations, which helps you get results much faster and for less money. Unfortunately, this is also hard to do in GSheets, and hard to explain in a few short paragraphs, hence its exclusion here.
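As a small taste of the idea, this sketch uses the standard L4 orthogonal array to cover three two-option ad elements in 4 ads instead of all 8 combinations. The three factors and their options are hypothetical. The helper checks the defining property of the array: every pair of columns contains each combination of levels equally often.

```python
from itertools import combinations, product

# Standard L4(2^3) orthogonal array: 4 runs, 3 two-level factors (levels 0 and 1).
L4 = [
    (0, 0, 0),
    (0, 1, 1),
    (1, 0, 1),
    (1, 1, 0),
]

# Hypothetical ad elements, each with two options.
factors = {
    "image": ["Gameplay", "Character"],
    "headline": ["Free to play", "Beat your friends"],
    "call_to_action": ["Install now", "Play free"],
}


def is_orthogonal(array):
    """True if every pair of columns shows each level combination equally often."""
    for i, j in combinations(range(len(array[0])), 2):
        counts = {}
        for row in array:
            counts[(row[i], row[j])] = counts.get((row[i], row[j]), 0) + 1
        n_levels_i = len({row[i] for row in array})
        n_levels_j = len({row[j] for row in array})
        if len(counts) != n_levels_i * n_levels_j or len(set(counts.values())) != 1:
            return False
    return True


def ads_from_array(array, factors):
    names = list(factors)
    return [{name: factors[name][level] for name, level in zip(names, row)}
            for row in array]


full_factorial = list(product(*factors.values()))  # every combination: 8 ads
fractional = ads_from_array(L4, factors)            # balanced subset: 4 ads
print(is_orthogonal(L4), len(full_factorial), len(fractional))
```

The saving comes from assuming interactions between elements are small. Each element's main effect can still be estimated, but in L4 those estimates are confounded with two-way interactions.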

Frequently asked questions about Creative Testing

Why should I do creative testing?

With more and more of marketing getting automated, deciding what creative to test is really the only major growth lever left. Google and Meta now handle most of the targeting automatically, along with budget allocation and campaign optimization. However, creative testing can still yield an order-of-magnitude (10x) improvement over past performance in some cases. Even when AI tools like ChatGPT and DALL-E automate the actual creation of messaging and creative assets, there will still be a premium on deciding what to test.

What factors increase the cost of a test?

Reaching statistical significance requires a number of observations that rises or falls based on several factors. It depends on the expected effect size and the conversion rate: smaller effects and lower conversion rates both require more observations. However, the biggest factor is the number of variations, which multiplies with each new creative or messaging variant you add, because every new creative has to be paired with every message (and vice versa). This template was designed to help you weigh the trade-off between wanting to test everything and what’s actually realistic to test given your budget.
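This sketch shows how those factors move the number of observations. It assumes every creative is paired with every message, and the 3 messages and 1% conversion rate are hypothetical inputs. Each extra creative adds one variation per message, so the total grows linearly in each list and multiplies across the two. Halving the expected effect size quadruples the observations needed.

```python
def total_observations(n_creatives: int, n_messages: int,
                       cvr: float, effect: float) -> float:
    # Same sheet formula, simplified: 26 * (1 - cvr) / (cvr * effect**2) per variation.
    per_variation = 26 * (1 - cvr) / (cvr * effect ** 2)
    # Assumption: every creative is paired with every message.
    return per_variation * n_creatives * n_messages


# Hypothetical: 3 messages and a 1% conversion rate.
for effect in (0.20, 0.10):
    for n_creatives in range(1, 5):
        total = total_observations(n_creatives, 3, cvr=0.01, effect=effect)
        print(f"effect={effect:.0%} creatives={n_creatives} "
              f"variations={n_creatives * 3} observations={total:,.0f}")
```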

Why not just let Meta or Google optimize which ad gets shown?

That is actually my recommendation for all but the biggest, existential problems that justify the high cost of testing. Place most creative refreshes and small changes in the same ad set or ad group as the existing winners, and let the algorithm decide. Even then, most creative decision-making is going to have to be opinion-driven: there are far too many potential combinations of ideas, and most of those ideas obviously won’t work. My recommendation is to hand these decisions to a high-judgment individual or small team you trust. Feed back to them the data from the things you do split test, so they can keep training their gut on what’s working.

How do I brainstorm creative ideas in the first place?

Most ‘creative’ people, like designers or copywriters, draw on their experience and what they know about the brand, and synthesize that into new ideas that have a high chance of working. However, you may not have that experience, or may not have developed your eye for design or good writing. In that case you can brute-force the process with something I call ‘meme mapping’. Build a swipefile containing more samples than anyone else, and comb through it looking for repeating patterns (memes) that correlate with good or bad performance. You can then choose what to copy and where to innovate from there.
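A first pass at spotting those patterns can be as simple as tallying how often each tagged pattern appears among winners. The sketch below is only that kind of tally, not the full meme mapping process. The swipefile entries, tag names and "winner" labels are all invented for illustration, and with counts this small the rates are very noisy.

```python
from collections import defaultdict

# Hypothetical swipefile: each ad is tagged with the patterns ("memes") it uses
# and labelled a winner or not by whatever performance signal you have.
swipefile = [
    {"memes": {"ugc_testimonial", "before_after"}, "winner": True},
    {"memes": {"ugc_testimonial", "discount"}, "winner": True},
    {"memes": {"before_after"}, "winner": False},
    {"memes": {"discount", "celebrity"}, "winner": False},
    {"memes": {"ugc_testimonial"}, "winner": True},
]


def meme_win_rates(ads):
    """Map each meme to (share of ads using it that were winners, number of ads)."""
    seen = defaultdict(int)
    wins = defaultdict(int)
    for ad in ads:
        for meme in ad["memes"]:
            seen[meme] += 1
            wins[meme] += int(ad["winner"])
    return {meme: (wins[meme] / seen[meme], seen[meme]) for meme in seen}


rates = meme_win_rates(swipefile)
for meme, (rate, count) in sorted(rates.items(), key=lambda kv: -kv[1][0]):
    print(f"{meme}: {rate:.0%} win rate across {count} ads")
```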

Send us your feedback

Your feedback helps us constantly improve and update our current use cases, and create new ones on demand. If you have any questions about this use case, or would like to discuss the topic further, reach out to us at info@growthfullstack.com.

Michael Taylor

https://getrecast.com/